The default mental image of video compression involves unwanted artifacts, like pixelation and blockiness in the image. That picture sells short the complexity that actually goes into compressing video content. In particular, it overlooks a fascinating process called interframe prediction, which uses keyframes and delta frames to intelligently compress content in a manner that is intended to go unnoticed.

This article describes this process in detail, while also giving best practices and ideal encoder settings that you can apply for use with your live streaming platform.

- Understanding video frames

- An opportunity to compress: interframe

- What is a keyframe?

- How do p-frames work?

- What are b-frames and how do they differ from p-frames?

- How do you set a keyframe?

- Choosing a keyframe interval at the encoder level

- Relationship between keyframes and bitrates

- What’s the best setting for a keyframe interval?

- What’s an IDR-frame?

- Should someone use an “auto” keyframe setting?

- Perfecting a keyframe strategy

Understanding video frames

There are a lot of terms and aspects of streaming technology that can be taken for granted. As someone matures as a broadcaster, it pays to understand these elements in greater detail, both to learn why a process is done and to find its optimal settings.

For example, many broadcasters have seen keyframes mentioned before, or have seen the setting in an encoder like Wirecast, without quite realizing what a keyframe is and how beneficial the process is for streaming. A keyframe is an important element, but really only part of a longer process that helps to reduce the bandwidth required for video. To understand this relationship, one first needs to understand video frames.

Starting at a high level, most people probably realize that video content is made up of a series of frames. The frame rate is usually denoted as FPS (frames per second), and each frame is a still image that, when played in sequence, creates a moving picture. So content created at 30 FPS has 30 “still images” that play for every second of video.

An opportunity to compress: interframe

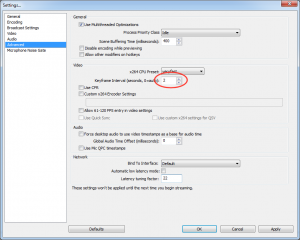

On an average video, if someone were to take 90 consecutive frames and spread them out, they would see a lot of elements that are pretty much identical. For example, if someone is talking while standing next to a motionless plant, it’s unlikely that information related to that plant will change. As a result, a lot of bandwidth is wasted just to convey that something hasn’t changed.

Consequently, when looking for effective ways to compress video content, frame management became one of the cornerstone principles. So if that plant in the example is not going to change, why not just keep using the same elements in some of the subsequent frames to reduce space?

This realization gave birth to the idea of interframe prediction. This is a video compression technique that divides frames into macroblocks and then looks for redundancies between blocks. This process works by using keyframes, also known as i-frames or intra frames, and delta frames, which only store changes in the image to reduce redundant information. These collections of frames are often referred to by the rather non-technical sounding name of a “group of pictures”, abbreviated as GOP. Video codecs, which are used for encoding or decoding a digital data stream, all have some form of interframe management. H.264, MPEG-2 and MPEG-4 all use a three frame approach that includes keyframes, p-frames, and b-frames.
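To make the idea of delta frames concrete, here is a minimal sketch in Python. It is a toy model, not how H.264 or any real codec works: frames are small grids of numbers, blocks are 2x2, and a block counts as "changed" only if it is not identical to the block at the same position in the previous frame. The function names (`split_into_blocks`, `encode_delta`, `apply_delta`) are made up for this illustration.

```python
def split_into_blocks(frame, block_size):
    """Split a 2D frame (list of rows) into blocks keyed by (block_row, block_col)."""
    blocks = {}
    for top in range(0, len(frame), block_size):
        for left in range(0, len(frame[0]), block_size):
            block = tuple(
                tuple(row[left:left + block_size])
                for row in frame[top:top + block_size]
            )
            blocks[(top // block_size, left // block_size)] = block
    return blocks


def encode_delta(previous_frame, current_frame, block_size=2):
    """Keep only the blocks of current_frame that differ from previous_frame."""
    prev_blocks = split_into_blocks(previous_frame, block_size)
    curr_blocks = split_into_blocks(current_frame, block_size)
    return {pos: blk for pos, blk in curr_blocks.items() if prev_blocks[pos] != blk}


def apply_delta(previous_frame, delta, block_size=2):
    """Rebuild the current frame from the previous frame plus the changed blocks."""
    frame = [list(row) for row in previous_frame]
    for (block_row, block_col), block in delta.items():
        for r, block_line in enumerate(block):
            for c, value in enumerate(block_line):
                frame[block_row * block_size + r][block_col * block_size + c] = value
    return frame


# The "keyframe" stores the full image; only one pixel changes in the next frame.
keyframe = [
    [1, 1, 5, 5],
    [1, 1, 5, 5],
    [9, 9, 9, 9],
    [9, 9, 9, 9],
]
next_frame = [
    [1, 1, 6, 5],
    [1, 1, 5, 5],
    [9, 9, 9, 9],
    [9, 9, 9, 9],
]

delta = encode_delta(keyframe, next_frame)
print(len(delta), "of 4 blocks stored in the delta frame")
print(apply_delta(keyframe, delta) == next_frame)
```

In this toy example only one of the four blocks needs to be stored, and the full frame can still be rebuilt from the keyframe plus the delta. Real encoders go much further, for example by searching for blocks that have moved rather than only comparing identical positions.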

What is a keyframe?

The keyframe (i-frame) is the full frame of the image in a video. Subsequent frames, the delta frames, only contain the information that has changed. Keyframes will appear multiple times within a stream, depending on how it was created or how it’s being streamed.

If someone were to Google “keyframe”, they are likely to find some results related to animation and video editing. In this instance, we are using the word keyframe in how it relates to video compression and its relationship to delta frames.

How do p-frames work?

Also known as predictive frames or predicted frames, the p-frame follows another frame and only contains part of the image in a video. It is classified as a delta frame for this reason. P-frames look backwards to a previous p-frame or keyframe (i-frame) for redundancies. The amount of image presented in the p-frame depends on the amount of new information contained between frames.

For example, p-frames for someone talking to the camera in front of a static background will likely only contain information related to that person’s movement. However, someone running across a field as the camera pans will need a great deal more information in each p-frame to match both their movement and the changing background.

What are b-frames and how do they differ from p-frames?

Also known as bi-directional predicted frames, the b-frames follow another frame and only contain part of the image in a video. The amount of image contained in the b-frame depends on the amount of new information between frames.

Unlike p-frames, b-frames can look both backward and forward, to a previous or later p-frame or keyframe (i-frame), for redundancies. This makes b-frames more efficient than p-frames, as they are more likely to find redundancies. However, b-frames are not used when the encoding profile is set to baseline inside the encoder. This means the encoder has to be set at an encoding profile above baseline, such as “main” or “high”.
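The difference in direction between p-frames and b-frames can be sketched in a few lines of Python. This is a deliberately simplified model: it assumes each p-frame references only the nearest earlier keyframe or p-frame, and each b-frame references only the nearest one on each side. Real codecs can be more flexible than this, and the `reference_candidates` function and the `"IBBPBBP"` pattern are illustrative choices, not output from any encoder.

```python
def reference_candidates(gop):
    """Map each frame index (display order) to the frame indexes it may reference.

    gop is a string such as "IBBPBBP" where I = keyframe, P = p-frame, B = b-frame.
    """
    # Keyframes and p-frames act as the anchors that other frames point to.
    anchors = [i for i, kind in enumerate(gop) if kind in "IP"]
    refs = {}
    for i, kind in enumerate(gop):
        earlier = [a for a in anchors if a < i]
        later = [a for a in anchors if a > i]
        if kind == "I":
            refs[i] = []                           # full image, no references
        elif kind == "P":
            refs[i] = earlier[-1:]                 # looks backward only
        else:
            refs[i] = earlier[-1:] + later[:1]     # looks backward and forward
    return refs


gop = "IBBPBBP"
refs = reference_candidates(gop)
for index, kind in enumerate(gop):
    print(index, kind, refs[index])
```

In this pattern the p-frame at index 3 points back to the keyframe at index 0, while the b-frames at indexes 1 and 2 can draw on both the keyframe before them and the p-frame after them.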

How do you set a keyframe?

In regards to video compression for live streaming, a keyframe is set inside the encoder. This is configured by an option sometimes called a “keyframe interval” inside the encoder.

The keyframe interval controls how often a keyframe (i-frame) is created in the video. The higher the keyframe interval, generally the more compression that is being applied to the content, although that doesn’t mean a noticeable reduction in quality. For an example of how keyframe intervals work, if your interval is set to every 2 seconds, and your frame rate is 30 frames per second, this would mean roughly every 60 frames a keyframe is produced.
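The arithmetic above can be written as a short Python sketch. The helper names are made up for illustration, and the sketch assumes a perfectly fixed interval starting with a keyframe at frame 0, which real encoders may not follow exactly.

```python
def frames_per_keyframe(interval_seconds, fps):
    """Number of frames between keyframes for a given interval and frame rate."""
    # Round because frame rates such as 29.97 do not divide evenly.
    return max(1, round(interval_seconds * fps))


def keyframe_indexes(total_frames, interval_seconds, fps):
    """Frame numbers (starting at 0) where a keyframe would be produced."""
    step = frames_per_keyframe(interval_seconds, fps)
    return list(range(0, total_frames, step))


print(frames_per_keyframe(2, 30))    # 60
print(keyframe_indexes(150, 2, 30))  # [0, 60, 120]
```

So in 5 seconds of 30 FPS video (150 frames) with a 2 second interval, only 3 frames are full keyframes and the rest are delta frames.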

The term “keyframe interval” is not universal and most encoders have their own term for this. Adobe Flash Media Live Encoder (FMLE) and vMix, for example, use the term “keyframe frequency” to describe this process. Other programs and services might call the interval the “GOP size” or “GOP length”, going back to the “Group of Pictures” abbreviation.

Choosing a keyframe interval at the encoder level

In terms of setting a keyframe interval, it varies from encoder to encoder.

For FMLE, this option, denoted as “Keyframe Frequency”, is found in the software encoder by clicking the wrench icon to the right of format.

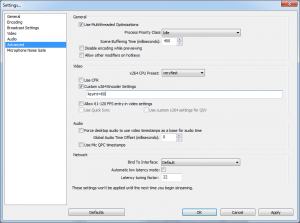

In Wirecast, this is set from the Encoder Presets menu and the option is called “key frame every”. Wirecast is different as the interval is actually denoted in frames. So for a 30 FPS broadcast, setting the “key frame every” 60 frames would roughly give a keyframe interval of 2 seconds, as you have 30 frames every second.

For the vMix encoder, one needs to first click the gear icon near streaming, which opens the Streaming Settings. Near the quality option here is another gear icon and clicking this will open up a menu that has the ability to modify the “Keyframe Frequency”.

In Open Broadcaster Software (OBS), for versions after v0.55b, the keyframe interval can be set in the Settings area under Advanced. For versions of OBS before v0.542b, it’s not very clear how to modify the keyframe interval, but this is actually a component of Settings. Once there, go to Advanced and then select “Custom x264 Encoder Settings”. In this field one needs to enter the following string: “keyint=XX”, with the XX being the number of frames until a keyframe is triggered. Like Wirecast, if a keyframe interval of 2 seconds is desired and the frame rate is 30 FPS, enter the following: “keyint=60”.

For XSplit, keyframe interval is a component of the channel properties. Under the Video Encoding area, one will find a listing that says “Keyframe Interval (secs)”. To the far right of this is a gear icon. Clicking the gear will launch a “Video Encoding Setup” popup. This will allow someone to specify the keyframe interval in seconds.
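Since some encoders take the interval in frames (Wirecast, or the “keyint=XX” string in older OBS versions) and others in seconds (XSplit), a small conversion helper can prevent mistakes. The sketch below is only a convenience for the arithmetic. The function names are hypothetical and nothing here talks to an actual encoder.

```python
def seconds_to_frames(interval_seconds, fps):
    """Convert a keyframe interval in seconds to a whole number of frames."""
    return max(1, round(interval_seconds * fps))


def frames_to_seconds(interval_frames, fps):
    """Convert a keyframe interval in frames back to seconds."""
    return interval_frames / fps


def x264_keyint_option(interval_seconds, fps):
    """Build the "keyint=XX" string described above for a custom x264 settings field."""
    return f"keyint={seconds_to_frames(interval_seconds, fps)}"


print(x264_keyint_option(2, 30))   # keyint=60
print(frames_to_seconds(60, 30))   # 2.0
```

Note that the same frame count means a different duration at a different frame rate: 60 frames is 2 seconds at 30 FPS but only 1 second at 60 FPS, so the value should be recalculated whenever the frame rate changes.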

Relationship between keyframes and bitrates

Mileage in this explanation might vary, as encoders do manage bitrates and keyframes differently. Using an encoder like Wirecast, one might notice that broadcasting someone talking against a still background has “higher quality” compared to broadcasting someone jumping up and down against a moving background. This can be reproduced when using the exact same average bitrate and keyframe interval for both. The reason, in part, is that the delta frames have a ton of information to share in the jumping example. There is very little redundancy, meaning a lot more data needs to be conveyed on each delta frame.

If you have an encoder like Wirecast, though, it’s trying its hardest to keep the stream around that average bitrate that was selected. Consequently, the added bandwidth that is needed for the additional information contained in the delta frames results in the quality being reduced to try and keep the average bitrate around the same level.

What’s the best setting for a keyframe interval?

There has never been an industry standard, although 10 seconds is often mentioned as a good keyframe interval, even though that’s no longer suggested for streaming. The reason it was suggested is that, for a standard 29.97 FPS file, the resulting content is responsive enough to support easy navigation from a preview slider. To explain more, a player cannot start playback on a p-frame or b-frame. So using the 10 second example, if someone tried to navigate to a point that was 5 seconds into the feed, it would actually shift 5 seconds back to the nearest keyframe and begin playback. This was considered a good trade-off for smaller bandwidth consumption, although for reference DVDs elected to use something much smaller than 10 seconds.
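The navigation behavior described above can be sketched as follows. This is a simplified model of a player, assuming it always falls back to the latest keyframe at or before the requested position. The `snap_to_keyframe` name and the list of keyframe times are made up for the example.

```python
import bisect


def snap_to_keyframe(seek_seconds, keyframe_times):
    """Return the latest keyframe time at or before the requested position.

    keyframe_times must be sorted in ascending order.
    """
    index = bisect.bisect_right(keyframe_times, seek_seconds) - 1
    return keyframe_times[max(index, 0)]


# Keyframes every 10 seconds.
keyframe_times = [0, 10, 20, 30]

print(snap_to_keyframe(5, keyframe_times))    # 0
print(snap_to_keyframe(15, keyframe_times))   # 10
print(snap_to_keyframe(20, keyframe_times))   # 20
```

With a 10 second interval, a seek can land up to just under 10 seconds earlier than requested. A shorter interval tightens that gap at the cost of more keyframes.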

However, for live streaming, the recommended level has drastically dropped. The reason for this is the advent of adaptive bitrate streaming. For those unfamiliar with adaptive streaming, this technology enables a video player to dynamically change between available resolutions and/or bitrates based upon the viewer trying to watch. So someone with a slower download speed will be given a lower bitrate version, if available. Other criteria, like playback window size, will also impact what bitrate is given.

True adaptive streaming doesn’t just make this check when the video content initially loads, though, but can also alter the bitrate based on changes on the viewer’s side. For example, if a viewer was to move out of range of a Wi-Fi network on their mobile, they will start using their normal cellular service which is liable to result in a slower download speed. As a result, the viewer might be trying to watch content that is too high of a bitrate versus their download speed. The adaptive streaming technology should realize this discrepancy and make the switch to a different bitrate.

The keyframe interval comes into action here as making that switch occurs during the next keyframe. So if someone is broadcasting with a 10 second interval, that means it could take up to 10 seconds before the bitrate and resolution might change. That length of time means the content might buffer on the viewer’s side before the change occurs, something that could lead to viewer abandonment.

Because of this, it’s recommended to have your keyframe interval set at 2 seconds for live streaming. This produces a result where the video track can effectively change bitrates often before the user might experience buffering due to a degradation in their download speed.
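To put numbers on the difference, the sketch below estimates how long a player would have to wait for the next keyframe before it can switch bitrates. It assumes keyframes fall on exact multiples of the interval and that the switch happens at the very next keyframe, which is a simplification of how real players and segmented delivery behave. The function name is hypothetical.

```python
import math


def seconds_until_next_keyframe(now_seconds, interval_seconds):
    """Time from now until the next keyframe strictly after now."""
    next_keyframe = (math.floor(now_seconds / interval_seconds) + 1) * interval_seconds
    return next_keyframe - now_seconds


# The viewer's connection degrades half a second after the last keyframe.
print(seconds_until_next_keyframe(0.5, 10))  # 9.5
print(seconds_until_next_keyframe(0.5, 2))   # 1.5
```

In this scenario the 10 second interval leaves the viewer on an unsuitable bitrate for 9.5 seconds, while the 2 second interval cuts that to 1.5 seconds.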

What’s an IDR-frame?

We are looping back at this point, but it pays to understand p-frames and b-frames, and to get a crash course in adaptive streaming, before talking about what an IDR-frame, or Instantaneous Decode Refresh frame, is. These are actually keyframes, and each keyframe can either be IDR based or non-IDR based. The difference between the two is that the IDR based keyframe works as a hard stop. An IDR-frame prevents p-frames and b-frames from referencing frames that occurred before the IDR-frame. A non-IDR keyframe will allow those frames to look further back for redundancies.

On paper, a non-IDR keyframe sounds ideal: it can greatly reduce file size by being allowed to look at a much larger sample of frames for redundancies. Unfortunately, a lot of issues arise with navigation and the feature does not play nicely with adaptive streaming. For navigation, let’s say someone starts watching 6 minutes into a stream. That’s going to cause issues as the p-frames and b-frames might be referencing information that was never actually accessed by the viewer. For adaptive streaming, a similar issue can arise if the bitrate and resolution are changed. This is because the new selection might reference data that the viewer watched at a different quality setting and is no longer parallel. For these reasons, it’s always recommended to make keyframes IDR based.

Generally, encoders will either provide the option to turn IDR based keyframes on or off or won’t give the option at all. For those encoders that do not give the option, it’s almost assured to be because the encoder is set up to only use IDR-frames.

Should someone use an “auto” keyframe setting?

In short: no.

Auto keyframe settings, in principle, are pretty great. They will automatically force a keyframe during a scene change. For example, if you switch from a PowerPoint slide to an image of someone talking in front of a camera, that would force a new keyframe. That’s desirable as the delta frames would not have much to work with, being unable to find redundancies between the PowerPoint slide and the image from the camera.

Unfortunately, this process does not work with some adaptive streaming technologies, most notably HLS. The HLS process requires the keyframes to be predictable and in sync. Using an “auto” setting will create variable intervals between keyframes. For example, the time between keyframes might be 7 seconds and then later it might be 2 seconds if a scene change occurs quickly.
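One way to picture the problem is to look at the gaps between keyframe timestamps. The sketch below is a hypothetical check, not part of HLS or any encoder. It assumes you already have a list of keyframe times in seconds, for example from an analysis tool, and simply tests whether the spacing is constant.

```python
def keyframe_gaps(keyframe_times):
    """Seconds between each pair of consecutive keyframes."""
    return [later - earlier for earlier, later in zip(keyframe_times, keyframe_times[1:])]


def has_fixed_interval(keyframe_times, tolerance=0.001):
    """True if every gap between keyframes matches the first gap within tolerance."""
    gaps = keyframe_gaps(keyframe_times)
    return all(abs(gap - gaps[0]) <= tolerance for gap in gaps)


fixed = [0, 2, 4, 6, 8]   # fixed 2 second interval
auto = [0, 7, 9, 16]      # "auto" setting reacting to scene changes

print(keyframe_gaps(auto))        # [7, 2, 7]
print(has_fixed_interval(fixed))  # True
print(has_fixed_interval(auto))   # False
```

The second list mirrors the example above, where one gap is 7 seconds and the next is 2 seconds, which is the kind of unpredictable spacing the “auto” setting produces.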

For most encoders, disabling “auto change” or “scene change detect” features often just means specifying a keyframe interval. For example, in OBS, if a keyframe interval is set at 0 seconds then the auto feature will kick in. Placing any other number in there, like 1 or 2, will disable the auto feature.

If the encoder, like Wirecast, has an option for “keyframe alignment”, it should be known that this is not the same process. Having keyframes aligned is a process for creating specific timestamps and is best suited for keeping multiple bitrates that the broadcaster is sending through the encoder in sync.

Perfecting a keyframe strategy

With the advent of adaptive bitrates, the industry is at an odd juncture where there is a pretty clear answer on best practices for keyframes and live streaming. That strategy includes:

- Setting a keyframe interval at around 2 seconds

- Disabling any “auto” keyframe features

- Utilizing IDR based keyframes

- Using an encoding profile higher than baseline to allow for b-frames

This strategy allows for easy navigation of content, for on demand viewing after a broadcast, while still reaping the benefits of frame management and saving bandwidth by reducing redundancies. It also supports adaptive bitrate streaming, an important element of a successful live broadcast and of being able to support viewers with slower connections.

Please contact us with more questions on interframe and how IBM Watson Media can help you deliver high quality video alongside lower bitrate options through cloud transcoding.

Disclaimer: This article is aimed at helping out live broadcasters or at least those who plan for a healthy video on demand strategy over streaming. The answer to many of these questions would of course be different depending on playback method. For example, when creating video content that will be played from a video file, the “scene change” option is just one example of something that would be ideal. Some of these techniques only become undesirable in relation to streaming when using adaptive technology.