79% of executives with video content archives agree that a “frustration of using on-demand video is not being able to quickly find the piece of information I am looking for when I need it.” This figure comes from a joint IBM and Wainhouse Research report, which interviewed 1,801 executives. The frustration is a growing challenge, but one that can be addressed while also adding valuable captions to video content. The solution is automatic closed captioning, with support for both editing and searching the captions.

The capability is called Watson Captioning, and it is available as part of IBM Video Streaming and as a standalone service. It uses IBM Watson to generate closed captions by converting the speech in a video to text. Once the captions are generated, content owners can edit them for accuracy in a dashboard editor, which underlines words whose confidence level falls below a certain threshold. The corrected captions are then not only displayed in the player but also searchable, letting viewers jump to the parts of the video where their topic of interest is mentioned.

This article briefly covers how these captions are generated, then details the viewer experience of searching them and how content owners can edit them.

Automatic closed captioning

Generating captions with machine learning is a quick process, taking roughly as long as the length of the asset. Initiating the process requires selecting a language for either the video or the channel, as noted in this Watson Generated Captions guide. Supported languages include: Arabic, Chinese, English (UK or US), French, Japanese, Portuguese and Spanish.

The accuracy of the captions depends on several factors. First is the clarity of the dialogue. Optimal conditions involve content with one speaker who is talking at a normal pace without background noise. Multiple speakers, strong accents, slurred pronunciation and ample background noise, be it a soundtrack or just general noise, can all reduce the accuracy of the generated captions. Low quality audio can also hurt accuracy, although this should only affect content that was overly compressed.

In addition, Watson can only transcribe words that it’s familiar with. It will determine the most appropriate result for words and phrases, but can misinterpret both names and industry-specific terms. Watson also uses the entire video when generating captions, which improves accuracy. For example, Watson might initially transcribe someone as saying they “found a really good sale”, but if the later context is about boats, the phrase could be corrected to “found a really good sail”. Both this contextual matching and overall accuracy can be improved further by training Watson for specific content. Contact IBM sales to learn more about this optional service.

Searching captions

Once the captions are generated, users can start to search them. For internal content, this includes the portal experience, where an enterprise video search looks across transcripts to turn up relevant videos. However, finding a video is sometimes only part of the equation. For example, an hour-long video coming up in search results can be daunting for viewers if they don’t know when the relevant information is introduced.

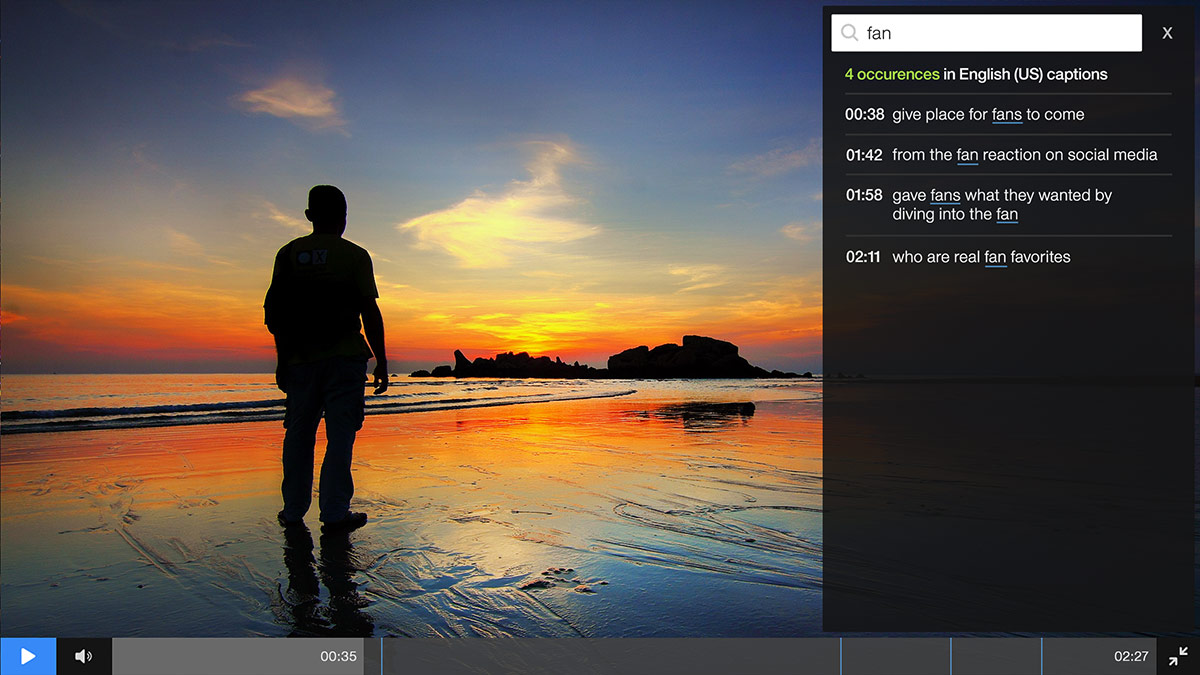

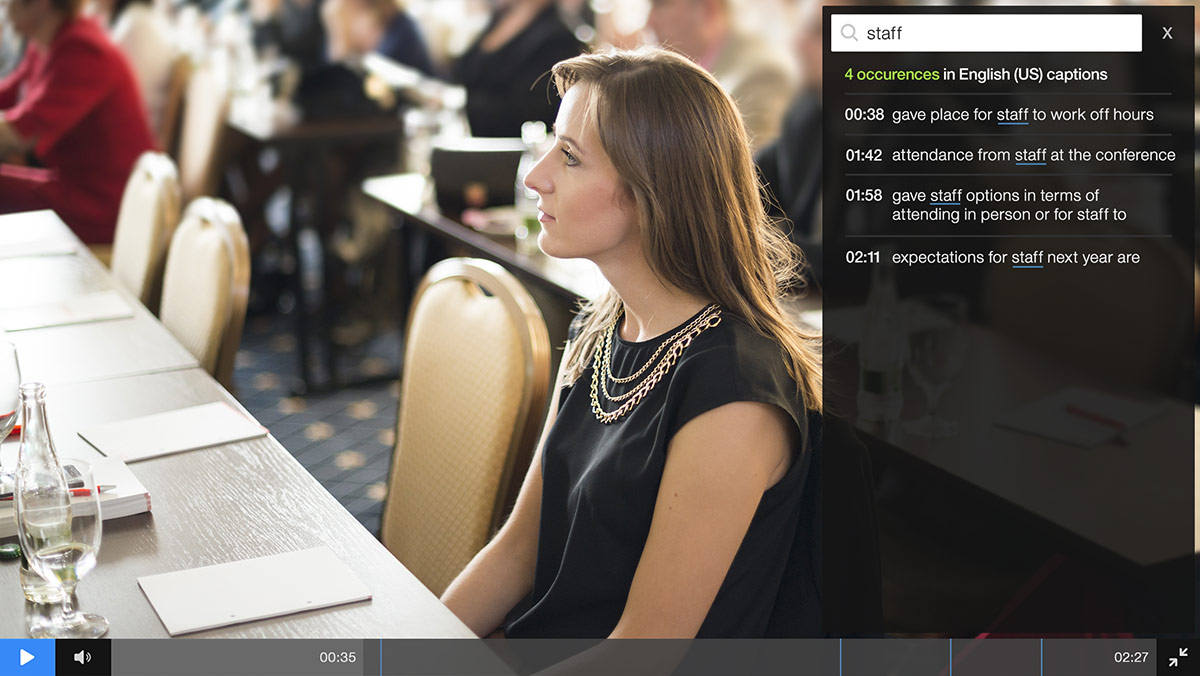

As a result, IBM Watson Media has expanded its caption search capabilities by introducing search inside the player. This new feature allows viewers to type in terms and locate the specific instances where a word was mentioned. Each result includes a timestamp and, more conveniently, can be clicked in the player to jump to that part of the video. Consequently, viewers can quickly skim through long assets, assured that the information they seek is contained inside, and jump to the most relevant moments.

The search results are also flexible. For example, if a viewer searches for “fan” it would produce a list of results that include both singular, “fan”, and plural, “fans”, versions.
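To make the idea concrete, here is a minimal sketch of a caption search that matches singular and plural forms and returns timestamps to jump to. It is an illustration only, not how Watson Captioning is implemented: the `Cue` class, the `normalize` and `search_cues` helpers and the sample captions are all assumptions, and the plural handling is deliberately naive.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    start: float  # seconds from the start of the video
    text: str


def normalize(word: str) -> str:
    """Lowercase, strip punctuation, and drop a simple trailing plural 's'."""
    word = word.lower().strip(".,!?;:\"'()")
    # Naive rule (an assumption for this sketch): "fans" -> "fan", "class" stays.
    if len(word) > 3 and word.endswith("s") and not word.endswith("ss"):
        word = word[:-1]
    return word


def search_cues(cues: list[Cue], term: str) -> list[Cue]:
    """Return the cues that contain the term in singular or plural form."""
    target = normalize(term)
    return [c for c in cues if any(normalize(w) == target for w in c.text.split())]


# Made-up sample captions, not taken from a real video.
cues = [
    Cue(12.0, "Every fan was cheering."),
    Cue(95.5, "The fans loved the finale."),
    Cue(130.0, "A fantastic episode."),
]
print([c.start for c in search_cues(cues, "fan")])  # [12.0, 95.5]
```

Each returned `start` value is the point a player could seek to when the viewer clicks a result. Note that “fantastic” is not matched, because the comparison is made on whole words.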

Below is a live example of this search feature, using a case study video of USA Network’s Mr. Robot. Click the magnifying glass icon in the upper right of the player to begin the search.

This search capability makes content more accessible, while also increasing the importance of closed captions. As a result, it’s more crucial than ever to be able to go in and edit captions to ensure accuracy. Note that the search icon will only appear if the video has captions available. If no captions are present, or they have all been unpublished, the search will not display.

How to edit captions

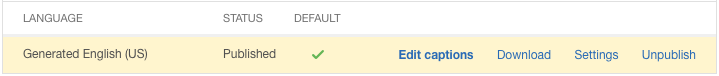

Once generated by Watson, the captions can be modified in the dashboard through an online editor. This is done by selecting the video and going to “Closed Captions”. Highlighting the generated captions makes the option to edit them appear.

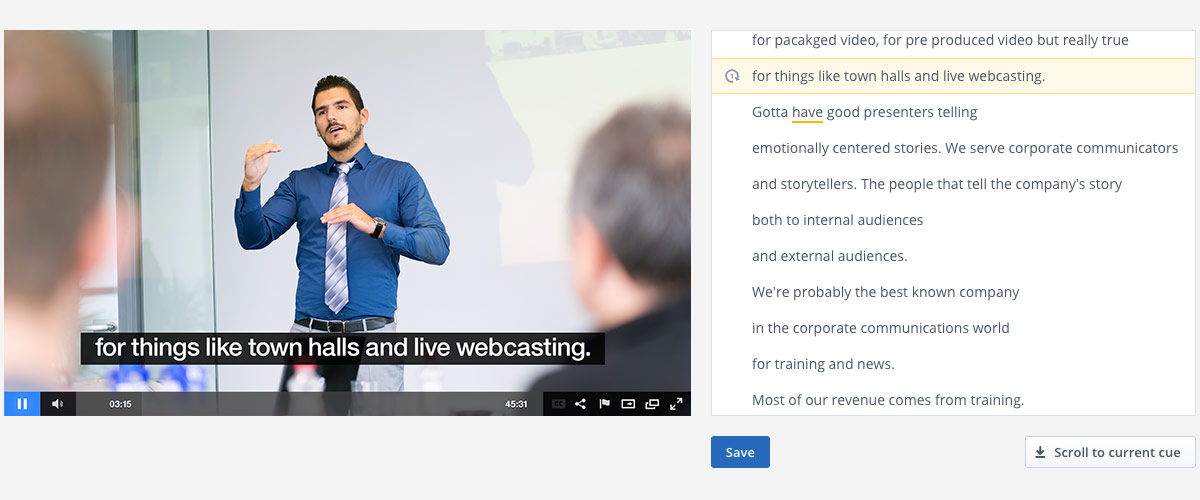

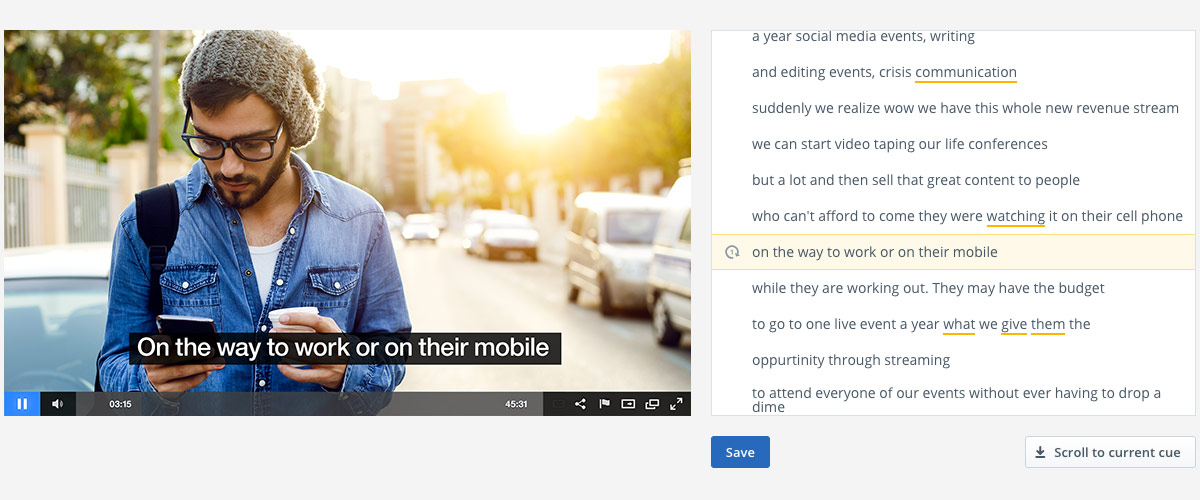

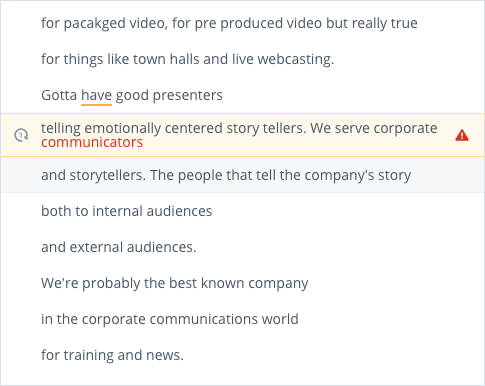

Once clicked, the editor will launch, making it possible to quickly modify the generated captions. To aid in this, words with a lower confidence level are underlined. As a result, editing the captions can be a more skim-friendly process, with more time devoted to the phrases that are more likely to be incorrect.
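The underlining logic can be pictured with a short sketch. This is a hypothetical illustration rather than the editor's actual code: the threshold value, the `mark_for_review` helper and the confidence scores are assumptions, and underscores stand in for the underline.

```python
# Hypothetical cutoff: the article does not state the real threshold.
REVIEW_THRESHOLD = 0.80


def mark_for_review(words, threshold=REVIEW_THRESHOLD):
    """Wrap low-confidence words in underscores to mimic an underline."""
    return " ".join(
        f"_{word}_" if confidence < threshold else word
        for word, confidence in words
    )


# Illustrative (word, confidence) pairs, not real Watson output.
transcript = [("found", 0.97), ("a", 0.99), ("really", 0.95), ("good", 0.96), ("sale", 0.52)]
print(mark_for_review(transcript))  # found a really good _sale_
```

Only the flagged word needs a close listen, which is what makes the review skimmable.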

Clicking on a phrase makes the player jump to that point in the video, which makes it easy to hear the audio and verify accuracy. If a change is required, simply clicking on a word allows it to be edited as needed. Edits can range from changing a single word to removing the entire phrase and starting over. Once an edit is started, the player will pause, giving the content owner a chance to finish the change before progressing to the next phrase. Changes also appear in the player in real time, so alterations can be spot checked quickly after being made.

Note that once an underlined word is clicked, the underline is removed. This is done to speed up the validation process by clearing the flag from terms that have presumably been checked: ideally, someone clicked the word, confirmed that it was accurate and moved on. As a result, it’s ill-advised to click on a word, hear that it’s not accurate and “save fixing it for later”. If this type of approach is desired, though, someone can always choose not to save the edited captions when they are done.

Additional editor features

A few extra bells and whistles are also located in the editor. These are small in scope, but built around making the editing process faster.

Repeat function

To the left of a caption phrase is a repeat button, which is an arrow in a circle around the number 1. When clicked the video will jump to that part of the content and play once, stopping at the end of the phrase. The button can be clicked again to repeat the segment. This is different from normal playback, which will otherwise continue automatically to the next phrase.

Length warning

If a caption is too long, a warning message will appear. This will denote when a caption’s length has reached a point where it will be truncated. Any words that will be cut off appear in red in the caption phrase.
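As a rough sketch of how such a warning could be computed, the function below splits a caption into the part that fits and the words that would be cut off. The character limit and the `split_at_limit` helper are assumptions made for illustration; the article does not state the editor's actual limit or how it measures length.

```python
def split_at_limit(caption: str, max_chars: int) -> tuple[str, str]:
    """Split a caption into (text that fits, overflow) on word boundaries."""
    words = caption.split()
    kept: list[str] = []
    for i, word in enumerate(words):
        candidate = " ".join(kept + [word])
        if len(candidate) > max_chars:
            # Everything from this word onward would be truncated.
            return " ".join(kept), " ".join(words[i:])
        kept.append(word)
    return " ".join(kept), ""


# 20 is an arbitrary limit chosen for the example.
shown, cut_off = split_at_limit("found a really good sail on the lake", 20)
print(shown)    # found a really good
print(cut_off)  # sail on the lake
```

In an editor, the second value is the text that would be rendered in red, and a non-empty value is the condition for showing the warning.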

Auto capitalization

Another small feature is the recognition of a period. Once added, the next word is automatically capitalized.

Spell check

When editing captions, the editor can also make use of the browser’s built-in spell check. So, for example, in Google Chrome, words that are spelled incorrectly will display with a red line underneath them. A right click on these words will then present correctly spelled alternatives.

Removal of incorrect spaces

When editing, one might accidentally add in an inappropriate space, for example in between a word and a period. In these instances the space will be removed.
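This clean-up, together with the capitalization after a period described earlier, can be approximated with two regular expressions. The `tidy_caption` function is a hypothetical sketch of the behavior, not the editor's implementation, and it only handles the simple cases named in this article.

```python
import re


def tidy_caption(text: str) -> str:
    """Remove stray spaces before punctuation and capitalize after a period."""
    # Drop whitespace that sits directly before sentence punctuation.
    text = re.sub(r"\s+([.,!?])", r"\1", text)
    # Capitalize a lowercase letter that follows a period and whitespace.
    return re.sub(
        r"(\.\s+)([a-z])",
        lambda m: m.group(1) + m.group(2).upper(),
        text,
    )


print(tidy_caption("we found a sail . it was cheap ."))
# we found a sail. It was cheap.
```

The order matters in this sketch: the stray space is removed first so that the period is in place before the capitalization rule runs.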

Shift caption to previous line

Another trick is to shift a word to the previous line by placing the cursor at the first word of a line and pressing Backspace on a PC or Delete on a Mac. This will shift that word to the line before. This can be helpful if you start to add text to the captions and they begin to exceed the display limit, as you can shift words this way. Additionally, once a word is shifted, the content will naturally line up so that it’s still in sync.

Summary

Closed captions are very valuable. Studies, such as the one by Facebook stating that 85% of video content on the platform is watched muted, show changing viewing habits that make them even more crucial, while increasing regulations are prompting more and more companies to pursue them.

Thanks to the addition of search, these captions are even more valuable, aiding in improving access to relevant assets. Furthermore, the ability to edit them, in combination with automatic closed captioning, offers a faster way to produce accurate captions for these search results.

Looking for closed captioning software that can automate the captioning process? Contact us to learn more about leveraging an AI-driven process for caption generation.