Curious about adding text to your video content? Unsure whether you should use closed captions or subtitles, or even what the differences between them are? This article explains what subtitles are and compares closed captioning with subtitles to help you decide which to use and why.

If you are curious about the technology behind automating closed caption generation, register for this How to Add Closed Captions Powered by IBM Watson webinar.

- What are subtitles?

- What are closed captions?

- What’s the difference between closed captions and subtitles?

- Which is better: closed captions or subtitles?

What are subtitles?

Subtitles are a text version of the dialogue spoken during video content. That video content can range from television and movies to online streaming sources.

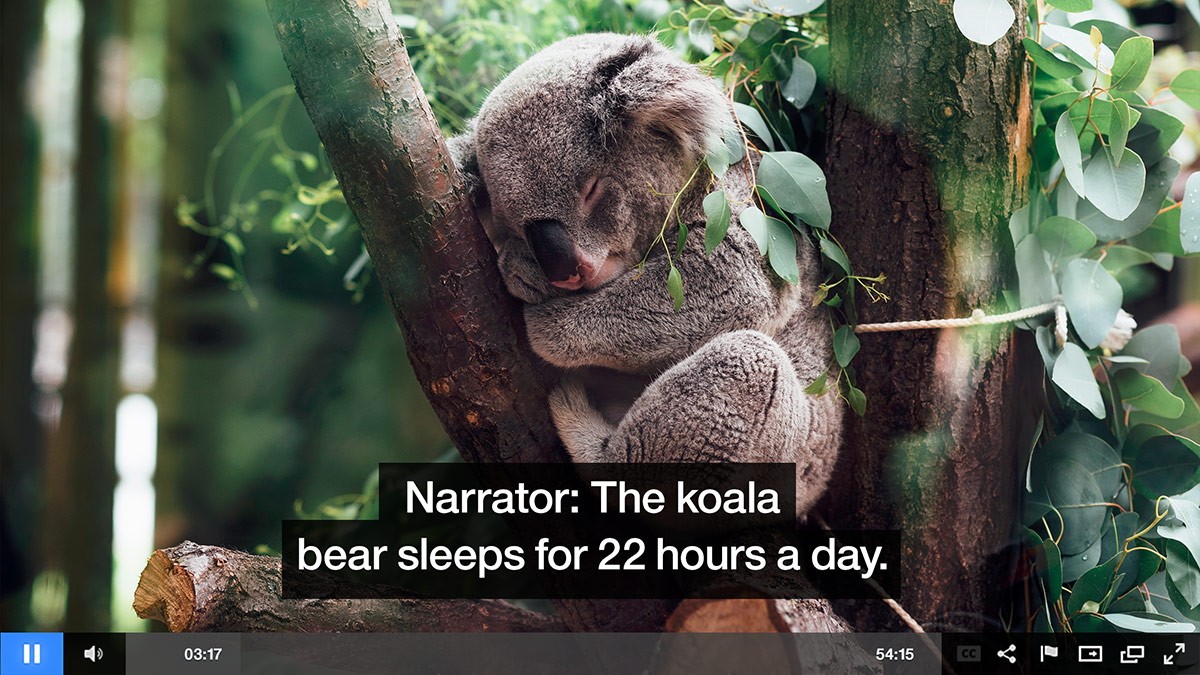

In terms of appearance, subtitles are displayed onscreen as text. Generally they appear in the lower middle portion of the screen, as seen in the accompanying image. However, subtitles can appear in any portion of the screen. For example, if key visual information is found in the lower third, they might appear at the top instead.

A common use case for subtitles is to offer a version that is in a different language from the original source. For example, a movie's dialogue might be in Japanese, but the subtitles could be in English. A video source can contain multiple sets of subtitles, each often referred to as a subtitle track. For example, that same movie might be available with subtitles in Japanese, English, Spanish and French. If the subtitles are in the same language as the source material, they are sometimes referred to as a transcript. If they are in a different language, they are considered a translation. In the latter case, subtitles might also translate onscreen text. For example, if a sign appears on screen in Japanese in that same movie, the subtitles might translate it into English.

Subtitles can either be burned into the video track, meaning they cannot be removed, or be delivered separately so that they can be toggled on or off. Burned-in subtitles were common on older media. For example, subtitle translations were often burned into the video track on VHS tapes.

What are closed captions?

Sometimes abbreviated as CC, closed captioning was developed to help people who are deaf or hard of hearing enjoy video content. The captions transcribe dialogue and events into text that can appear onscreen. By definition, the “closed” part of closed captions refers to the ability to hide them. If the captions are always visible, they are referred to as “open captions”.

On TV sets, a decoder is required to select and display the closed captions in televised content. In the United States, the Television Decoder Circuitry Act of 1990 requires televisions with screens 13 inches or larger, manufactured from July 1993 onward, to include built-in decoder circuitry designed to display closed captioned television transmissions. Some media have the captions built in, though. DVDs and Blu-rays are two examples where the text tracks are contained on the medium and selected from a remote or player. For online sources, they are selectable from inside the video player. For more information on closed captions, please read our What is Closed Captioning article.

What’s the difference between closed captions and subtitles?

Both closed captions and subtitles display text on the screen. The difference lies in the level of detail included in that text.

Subtitles transcribe dialogue, and aren’t necessarily created with the intent of helping deaf or hard of hearing people. In fact, as mentioned, the common use case for subtitles is to translate dialogue across languages. Subtitles can be in the same language as the content, though, so how do they differ from closed captions in that case? The answer is that closed captions provide information beyond spoken dialogue. For example, they might show “[door slams]” to signify a sound or “[eerie music starts]” to note the soundtrack. These cues are intended to give context to someone who would otherwise be unable to hear them.

Closed captions may also specify who is talking. For example, if characters off screen are talking, the captions might read:

“Kayla: How long has that been in the office?

Brancen: As long as I have been here.”

While an audience without hearing issues can tell who is talking from the voices, someone who has severe hearing loss cannot. Consequently, crediting the dialogue to the individual gives them key information that they would otherwise miss.

Furthermore, most regulations relate to closed captions. While some might pass off subtitles as closed captions, there are technical differences between the two, as closed captions should contain more information.

Which is better: closed captions or subtitles?

Subtitles are slightly easier to create, as they contain less information. Content run through a speech to text application can also be nearly ready to use as subtitles, although the output should be proofed for accuracy first.

That said, if ease of creation is not a deciding factor, closed captions are better. The two serve similar functions, but closed captions provide more information and therefore better assist those who are deaf or hard of hearing. The audience that benefits from this can be substantial, as well. Not surprisingly, the impact is larger the older the audience is. In fact, a study shows hearing loss as high as 30% in those over the age of 60, 14.6% in those ages 41-59 and 7.4% in those ages 29-40.

As a result, since closed captions directly address the needs of this audience, whereas subtitles only partially do, closed captions are the preferred method. Furthermore, industries that might be beholden to regulations should make a concerted effort to provide closed captions. While some might pass off subtitles as captions, and for a lot of content subtitles might provide most of the context someone who is deaf or hard of hearing needs, keep in mind that accuracy can be important. For example, Harvard and MIT were sued for not providing captions on some content and for “inaccurately captioned or was unintelligibly captioned” content as well.

Summary

Both subtitles and closed captions provide a great, ancillary service to video content. In fact, there is no reason the two can’t be mixed as well, providing closed captions that transcribe dialogue and relate action while also offering subtitle tracks that translate the dialogue into different languages.

The general takeaway, though, is that subtitles are strictly for dialogue or for translating onscreen text. Closed captions include dialogue but also additional information to assist the deaf or hard of hearing, such as cues for what is happening on screen or credits that attribute dialogue to the person saying it.

Looking to add closed captions to your video content? IBM Watson Media has been working to automate this process, with solutions both built into IBM’s video streaming offerings and available as a standalone product called IBM Watson Captioning. To learn more, download this white paper on Captioning Goes Cognitive: A New Approach to an Old Challenge.

If you prefer to caption manually and are looking for file formats, WebVTT can be a way to achieve this for on-demand content, while CEA-608 captions can do this for live content. Both can be used with IBM’s video streaming offerings.
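To give a sense of what a manually authored WebVTT file looks like, here is a minimal Python sketch that writes a few caption cues in that format. It reuses the examples from earlier in this article: a bracketed sound cue and dialogue credited to a speaker, which WebVTT can express with a voice tag (`<v Name>`). The `Cue` class, the `format_timestamp` and `to_webvtt` helpers, and the timings are all hypothetical names and values chosen for illustration; they are not part of any IBM product or library.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    """One caption cue (hypothetical helper type for this sketch)."""
    start: float       # seconds from the start of the video
    end: float         # seconds from the start of the video
    text: str          # dialogue or a bracketed sound cue
    speaker: str = ""  # optional; rendered as a WebVTT voice tag


def format_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp in hh:mm:ss.ttt form."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def to_webvtt(cues: list[Cue]) -> str:
    """Build the text of a WebVTT file from a list of cues.

    Assumption: cue text contains no '&' or '<' characters, which
    would need escaping in a real file.
    """
    blocks = ["WEBVTT"]  # required header line
    for cue in cues:
        timing = f"{format_timestamp(cue.start)} --> {format_timestamp(cue.end)}"
        # Credit the dialogue to a speaker when one is given.
        text = f"<v {cue.speaker}>{cue.text}</v>" if cue.speaker else cue.text
        blocks.append(f"{timing}\n{text}")
    # Cues are separated from each other by a blank line.
    return "\n\n".join(blocks) + "\n"


cues = [
    Cue(1.0, 3.5, "[door slams]"),
    Cue(4.0, 6.5, "How long has that been in the office?", speaker="Kayla"),
    Cue(7.0, 9.25, "As long as I have been here.", speaker="Brancen"),
]
print(to_webvtt(cues))
```

Saving the printed text to a file with a `.vtt` extension gives a caption track that a WebVTT-capable player can load. A subtitle-only version of the same file would simply leave out the sound cue and the speaker tags.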